Prometheus remote write lets you send metrics to a remote Prometheus-compatible storage server. It is primarily intended for long-term storage.

- By default, Prometheus remote write sends all metrics stored in local Prometheus to a remote server.

- Chances are you don't need every metric on the remote server, and sending them all only increases that server's storage use unnecessarily.

- Another scenario may be that you're using remote write to send metrics to a SaaS vendor like Grafana Cloud or Datadog. Keep in mind that they charge for the amount of metrics you send, so if you don't cut down on what you send, expect a hefty bill.

In this article, let's see how to send only the metrics from a specific scrape job, and how to send only the metrics whose names match a given prefix.

Prerequisites:

- Docker

- Docker-compose

Sending metrics only from a specific scrape job :

- Let's imagine a scenario where you're scraping multiple services and you want to send all the metrics from one specific job. For the sake of simplicity, let's say you're scraping metrics from 3 services: Cadvisor, node_exporter & Prometheus itself.

- If you only want to send Cadvisor metrics via remote write and drop all others, you can specify this in the `write_relabel_configs` config block.

- Below is my docker-compose stack, which runs 3 services, namely Prometheus, Cadvisor & node_exporter.

version: '3.8'

services:
  prometheus:
    image: prom/prometheus
    container_name: prometheus
    ports:
      - 9090:9090
    command:
      - --config.file=/etc/prometheus/prometheus.yml
    volumes:
      - ./prometheus.yaml:/etc/prometheus/prometheus.yml:ro
    depends_on:
      - cadvisor
      - node_exporter

  cadvisor:
    image: gcr.io/cadvisor/cadvisor
    container_name: cadvisor
    ports:
      - 8080:8080
    volumes:
      - /:/rootfs:ro
      - /var/run:/var/run:rw
      - /sys:/sys:ro

  node_exporter:
    image: quay.io/prometheus/node-exporter:latest
    container_name: node_exporter
    command:
      - '--path.rootfs=/host'
    pid: host
    volumes:
      - '/:/host:ro'
    ports:
      - 9100:9100

- Now let’s create a prometheus.yaml config to scrape the above targets and remote write to Grafana Cloud.

# prometheus global config
global:
  scrape_interval: 1s
  external_labels:
    environment: demo

scrape_configs:
  - job_name: prometheus
    static_configs:
      - targets: ['prometheus:9090']
  - job_name: cadvisor
    static_configs:
      - targets: ['cadvisor:8080']
  - job_name: node_exporter
    static_configs:
      - targets: ['node_exporter:9100']

remote_write:
  - url: https://your-server-endpoint.grafana.net/api/prom/push
    basic_auth:
      username: your-username
      password: your-grafana-api-key
    write_relabel_configs:
      - source_labels: [job]
        regex: 'cadvisor'
        action: keep

- I've chosen Grafana Cloud because it offers a free tier and is very easy to set up as a remote Prometheus server. This demo works with any remote-write-enabled Prometheus server.

- The magic happens in the `write_relabel_configs` block:

  - `source_labels`: selects values from existing labels. In our case, all metrics scraped via the cadvisor job carry the label `job="cadvisor"`.

  - `regex`: the pattern matched against the label values returned via `source_labels`. In our case, we don't need any special pattern. Just `cadvisor` will do, because Prometheus anchors the regex at both ends and we need to match exactly that value.

  - `action`: the action to perform based on regex matching, keep or drop. If you specify drop, all Cadvisor metrics will be dropped and the metrics from all other jobs will be sent.

- If you specify the action as keep, only Cadvisor metrics will be sent and all other metrics will be dropped.

- To send the metrics of a different job instead, just change the regex to that job's name.

- Now, you can start the stack by running :

docker-compose up -d
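
The single-job rule can be widened without adding more rules. As a sketch, assuming the same placeholder endpoint used in the config above and with `basic_auth` left out for brevity, the fragment below keeps metrics from two jobs through regex alternation. Since Prometheus anchors relabel regexes at both ends, each alternative has to be a complete job name.

```yaml
remote_write:
  - url: https://your-server-endpoint.grafana.net/api/prom/push
    # basic_auth omitted for brevity; keep it as in the full config.
    write_relabel_configs:
      # Keep only series whose job label is exactly cadvisor or node_exporter.
      - source_labels: [job]
        regex: 'cadvisor|node_exporter'
        action: keep
```

In this particular stack, `regex: 'prometheus'` with `action: drop` should have the same effect, since prometheus is the only other job. After editing prometheus.yaml, the Prometheus container needs to be restarted (or the config reloaded) for the change to apply.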

Verification

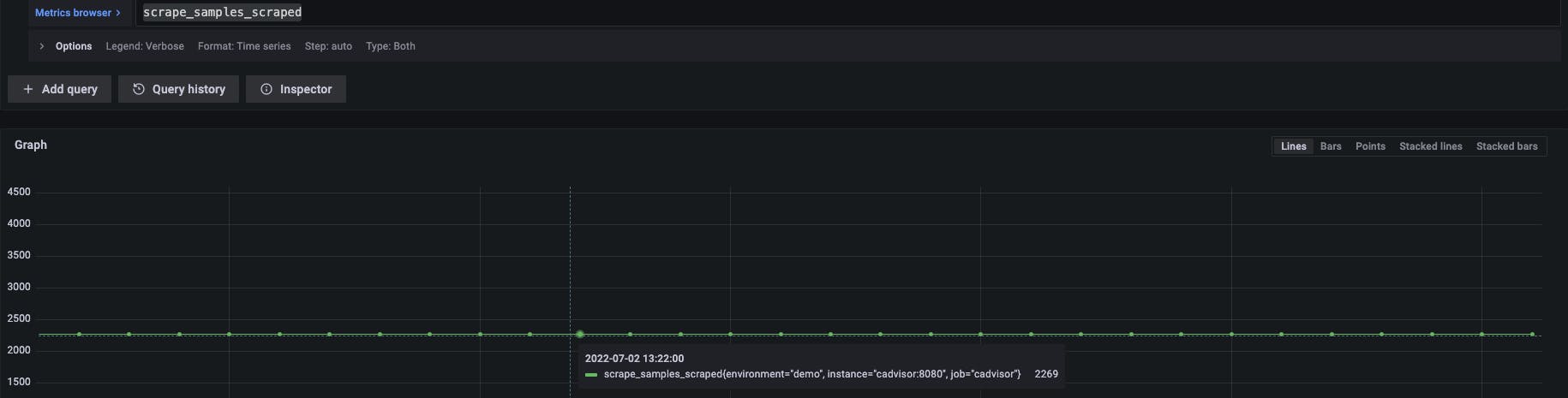

- To check how many metrics we have scraped from the Cadvisor job and from the others, query this metric in your local Prometheus :

scrape_samples_scraped

- As we can see, there are 2269 time series from the Cadvisor job.

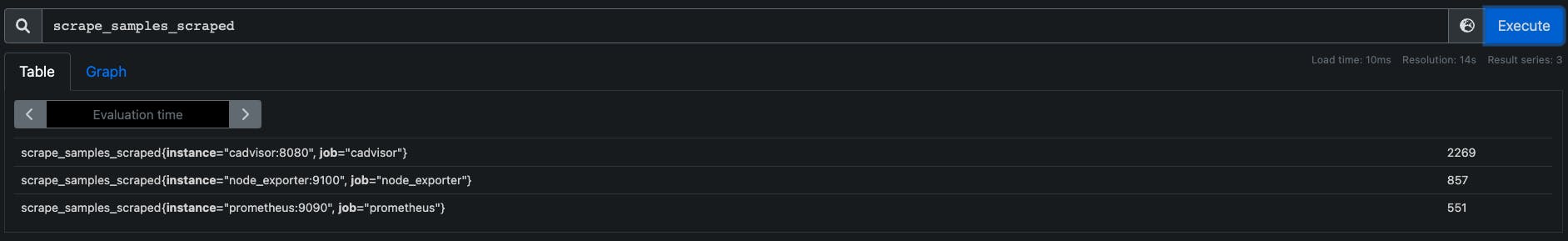

- We can now query the same metric, `scrape_samples_scraped`, from the remote Prometheus. It should return the same number and should not list any other job in the result.

Sending metrics which start with a prefix :

- For example, if you only want to send metrics starting with `node_memory`, i.e. only the memory-related metrics of a node, you can use a config similar to the one below in your prometheus.yaml :

write_relabel_configs:
  - source_labels: [__name__]
    regex: 'node_memory(.*)'
    action: keep

- The above config sends only the metrics whose names start with the `node_memory` prefix and drops all other metrics.
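
The job filter and the name-prefix filter can also be combined in a single rule. A minimal sketch, assuming the memory metrics come from the `node_exporter` job defined earlier: when several `source_labels` are listed, Prometheus joins their values with `;` (the default separator) before applying the regex, so the pattern has to describe both parts.

```yaml
write_relabel_configs:
  # job and __name__ are joined as "<job>;<metric name>" before matching.
  - source_labels: [job, __name__]
    regex: 'node_exporter;node_memory.*'
    action: keep
```

With this rule, a series whose name starts with `node_memory` but which was scraped by a different job would not be sent.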

Tip :

- If you want to check which labels are available in your Prometheus, there's an API endpoint for that : `/api/v1/labels`

- So you can make a curl request and get that list like this :

curl -s 'localhost:9090/api/v1/labels' | tr ',' '\n'
#result
"address"
"bios_date"
"job"
...

- Once you know which label you want to query, you can get the values for that specific label. For example, for the `job` label, we have 3 values available :

curl -s 'localhost:9090/api/v1/label/job/values'
#result
"data":["cadvisor","node_exporter","prometheus"]