Parsers are an important component of Fluent Bit: with them, you can take any unstructured log entry and give it a structure that makes processing and further filtering easier.

In this part of the Fluent Bit series, we’ll collect, parse and push Apache and Nginx logs to Grafana Cloud Loki via Fluent Bit.

Configure docker-compose:

- Let’s create a docker-compose file called `docker-compose.yaml` and add the config below:

```yaml
version: "3.5"

services:
  fluentbit:
    image: cr.fluentbit.io/fluent/fluent-bit
    ports:
      - "24224:24224"
      - "24224:24224/udp"
    volumes:
      - ./:/fluent-bit/etc/

  flog:
    image: mingrammer/flog
    command: '-l'
    depends_on:
      - fluentbit
    logging:
      driver: fluentd
      options:
        tag: apache

  nginx:
    image: kscarlett/nginx-log-generator
    depends_on:
      - fluentbit
    logging:
      driver: fluentd
      options:
        tag: nginx
```

- Let’s understand what this Docker stack is doing in detail.

- We have 3 services running in this stack.

`fluentbit`:

- We’re running the latest Fluent Bit image, and it listens on port 24224 (TCP and UDP).

- We’re mounting the current local directory at `/fluent-bit/etc/` inside the container. The `fluent-bit.conf` and `parsers.conf` files we create below therefore replace the image’s default configuration.

`flog`: flog is a fake Apache log generator. We’ll use it to produce logs to collect, parse and filter with Fluent Bit. The `-l` flag keeps it generating logs in a loop.

- flog is very useful when you want to generate and debug Apache-style logs locally.

- We’re using `depends_on` so that flog is started only after the fluentbit container. Note that `depends_on` only controls start order. It doesn’t wait until Fluent Bit is ready to accept logs.

- Next, we set the logging driver for the flog container to `fluentd`.
  - Docker’s default logging driver is `json-file`, which writes container logs to local JSON files.
  - We want the container logs shipped to Fluent Bit instead, hence the `fluentd` logging driver.
  - Docker’s `fluentd` logging driver works with both Fluentd and Fluent Bit. This is because Fluent Bit’s `forward` input understands the same Forward protocol.

- With `tag: apache`, we’re setting a tag that Fluent Bit uses later to match, filter and process these logs.

`nginx`: This service is exactly like the flog service above, except that it uses the `kscarlett/nginx-log-generator` image to generate Nginx web server logs, tagged `nginx`.

Configure Fluent Bit:

- Let’s create a file called `fluent-bit.conf` and add the config below to it:

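```
[SERVICE]
    log_level    info
    flush        1
    # Load the apache and nginx [PARSER] definitions from parsers.conf (created below)
    parsers_file parsers.conf

[INPUT]
    Name   forward
    Listen 0.0.0.0
    port   24224

[FILTER]
    Name     parser
    Match    apache
    Key_Name log
    Parser   apache

[FILTER]
    Name     parser
    Match    nginx
    Key_Name log
    Parser   nginx

[OUTPUT]
    Name        loki
    Match       *
    # Hostname of your Grafana Cloud Loki endpoint, without https://
    Host        <Loki host here>
    port        443
    tls         on
    tls.verify  on
    http_user   <username here>
    http_passwd <API-KEY here>
```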

INPUT: since we’re using docker logging driver fluend, we’ll be using forward input plugin to gather all container logs from Docker daemon and source it at fluent-bit.FILTER: We have 2 filters, one for each type of log file Apache and Nginx.- In the first filter, it will look for incoming logs with

tag : nginxand parse those logs with theparser : nginx. Same goes for Apache also. - In the further section, we’ll see how is it parsing the nginx and apache logs using parser config.

- In the first filter, it will look for incoming logs with

OUTPUT: We’re saying send all logs ( apache & nginx, hence the*) to Grafana cloud ( Loki). fluent-bit can also output the filtered logs to multiple destination. You can read more about that here.- hence the output plugin name is :

loki - Loki plugin has a couple of parameters.

hosti.e. the endpoint of your Loki stack. - We don’t want someone to snoop on the logs while sending to Grafana, hence the TLS.

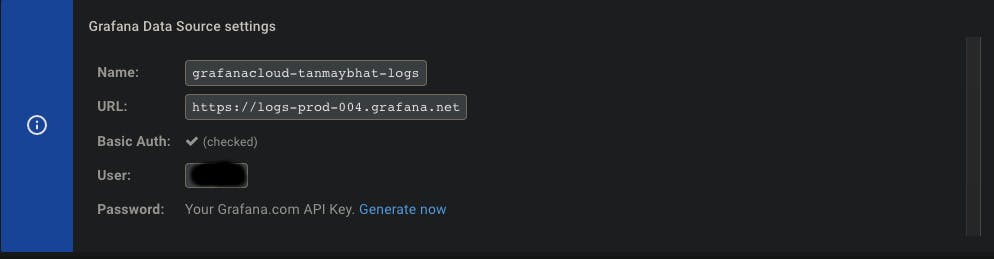

- http_user and http_passwd are

username&API_KEYfor Grafana Cloud.

- hence the output plugin name is :
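To make the FILTER step more concrete, here is a rough Python sketch of what one `parser` filter does to a record. It is not Fluent Bit’s implementation, and it rests on these assumptions:

- It assumes the record shape Docker’s `fluentd` logging driver sends (`container_id`, `container_name`, `source`, `log`).
- It uses Python’s `fnmatch` as a stand-in for Fluent Bit’s `Match` wildcards.
- It reuses the `nginx` regex from the `parsers.conf` shown in the next step, converted to Python’s `(?P<name>...)` group syntax.
- It mirrors the filter’s default `Reserve_Data`/`Preserve_Key` (both off), so only the parsed fields survive.
- The `parser_filter` helper, container ID/name and log line are all made up for illustration.

```python
import re
from fnmatch import fnmatchcase

# The nginx regex from parsers.conf, rewritten with Python's (?P<name>...) groups.
NGINX_RE = re.compile(
    r'^(?P<remote>[^ ]*) (?P<host>[^ ]*) (?P<user>[^ ]*) \[(?P<time>[^\]]*)\] '
    r'"(?P<method>\S+)(?: +(?P<path>[^\"]*?)(?: +\S*)?)?" '
    r'(?P<code>[^ ]*) (?P<size>[^ ]*)(?: "(?P<referer>[^\"]*)" "(?P<agent>[^\"]*)")'
)


def parser_filter(tag, record, match, key_name, regex):
    """Hypothetical helper: rough approximation of one [FILTER] section with Name parser."""
    if not fnmatchcase(tag, match):
        return record  # Match doesn't accept this tag: record passes through untouched
    m = regex.search(record.get(key_name, ""))
    if m is None:
        return record  # in this sketch, an unparseable line is kept as-is
    # Reserve_Data/Preserve_Key off: only the captured fields survive.
    # (Time_Key/Time_Format handling is left out of this sketch.)
    return {k: v for k, v in m.groupdict().items() if v is not None}


# Made-up record, shaped like what Docker's fluentd logging driver sends.
docker_record = {
    "container_id": "3f2a9c0d1e4b",
    "container_name": "/nginx-1",
    "source": "stdout",
    "log": '10.0.0.1 - - [10/Oct/2023:13:55:36 +0000] '
           '"GET /index.html HTTP/1.1" 200 612 "-" "curl/8.0"',
}

parsed = parser_filter("nginx", docker_record, "nginx", "log", NGINX_RE)
print(parsed["remote"], parsed["method"], parsed["path"], parsed["code"])
# 10.0.0.1 GET /index.html 200
```

A record tagged `apache` would not match `Match nginx`, so this filter would hand it back unchanged. The `apache` filter would then parse it.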

- Create a file called `parsers.conf` in the same directory and add the config below:

```
[PARSER]
    Name        apache
    Format      regex
    Regex       ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
    Time_Key    time
    Time_Format %d/%b/%Y:%H:%M:%S %z

[PARSER]
    Name        nginx
    Format      regex
    Regex       ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")
    Time_Key    time
    Time_Format %d/%b/%Y:%H:%M:%S %z
```

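- Parsing is what lets you filter and make sense of the logs you collected.
  - Each named group in a regex (for example `(?<host>...)`) becomes a field of the parsed record.
  - `Time_Key` and `Time_Format` tell Fluent Bit which captured field holds the timestamp and how to read it.
  - Later on, we’ll see the difference between shipping the logs with and without parsing.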
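As a rough illustration of the `apache` parser, the Python sketch below works in two steps. This mimics the parser’s result, not how Fluent Bit implements it:

1. It applies the same regex, converted to Python’s `(?P<name>...)` named-group syntax, to a made-up line in Apache common log format.
2. It reads the `time` capture with the `Time_Format` pattern.

The `parse_apache` helper is hypothetical. The sketch drops `time` from the fields, mirroring the parser’s default of not keeping the time key.

```python
import re
from datetime import datetime, timezone

# The apache regex from parsers.conf, rewritten with Python's (?P<name>...) groups.
APACHE_RE = re.compile(
    r'^(?P<host>[^ ]*) [^ ]* (?P<user>[^ ]*) \[(?P<time>[^\]]*)\] '
    r'"(?P<method>\S+)(?: +(?P<path>[^\"]*?)(?: +\S*)?)?" '
    r'(?P<code>[^ ]*) (?P<size>[^ ]*)(?: "(?P<referer>[^\"]*)" "(?P<agent>[^\"]*)")?$'
)

# Time_Format from parsers.conf; strptime understands these same directives.
TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def parse_apache(line):
    """Hypothetical helper: regex captures plus Time_Key/Time_Format handling."""
    m = APACHE_RE.match(line)
    if m is None:
        return None
    # Optional groups that didn't match (referer/agent here) are dropped.
    fields = {k: v for k, v in m.groupdict().items() if v is not None}
    # Time_Key time: the captured "time" value becomes the record timestamp.
    ts = datetime.strptime(fields.pop("time"), TIME_FORMAT)
    return ts, fields


line = '192.168.1.20 - frank [10/Oct/2023:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326'
ts, fields = parse_apache(line)
print(ts.astimezone(timezone.utc).isoformat())  # 2023-10-10T20:55:36+00:00
print(fields["host"], fields["method"], fields["path"], fields["code"], fields["size"])
```

Note that all captured values are strings. The trailing `( "referer" "agent")?$` part makes the combined-format fields optional, so common-format lines parse too.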

Configuring Grafana Cloud

- You can sign up for Grafana Cloud for free.

- Once it’s set up, navigate to the Loki section under your stack. The details there (the host, user and API key needed for the `[OUTPUT]` section) will look like below:

Action time:

- Let’s start our docker-compose stack by running the command:

```shell
docker-compose up -d
```

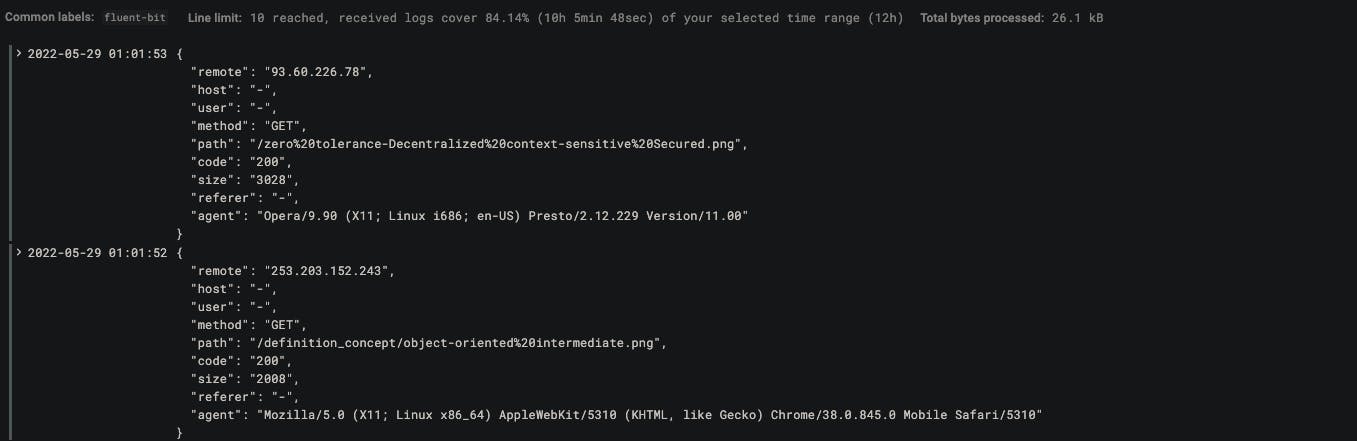

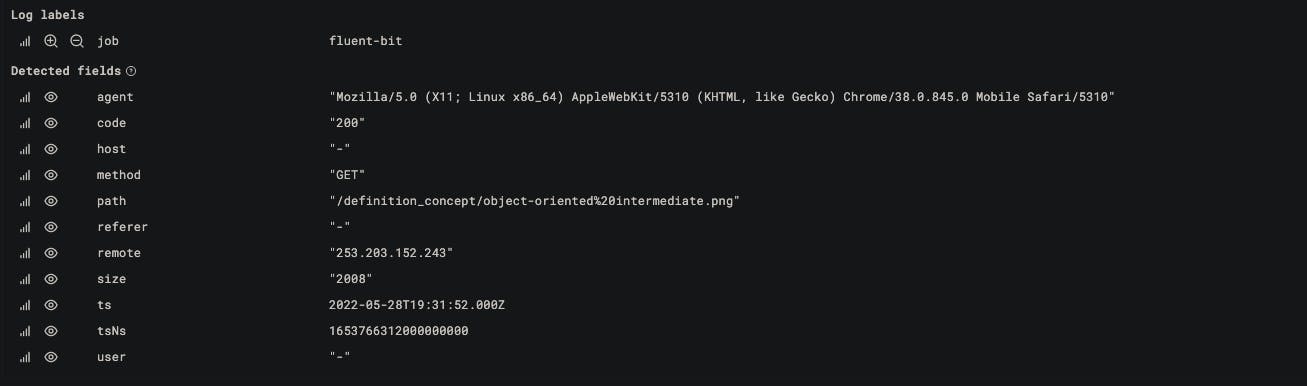

- Once all 3 containers are running, let’s check Grafana Cloud for the logs we pushed. It should look like this:

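- Since we have parsing enabled, clicking on a log line shows each part of the log (host, method, path, status code and so on) as a separate field.
  - This is extremely helpful when debugging issues through logs, because we can write queries over those fields instead of matching raw text.
  - Loki indexes only the stream labels, not these fields. The fields travel as JSON in the log line and are extracted at query time, for example with LogQL’s `json` parser.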

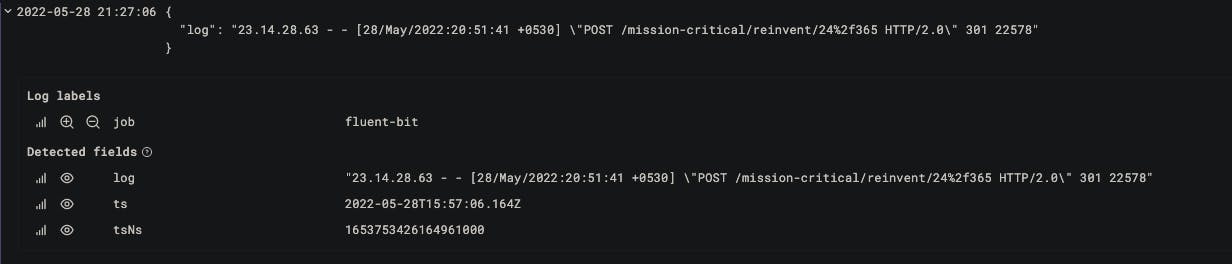

- If you’re wondering what this looks like without parsing, try removing the `[FILTER]` sections from `fluent-bit.conf` and restarting the docker-compose stack. The logs in Loki will then look like this:

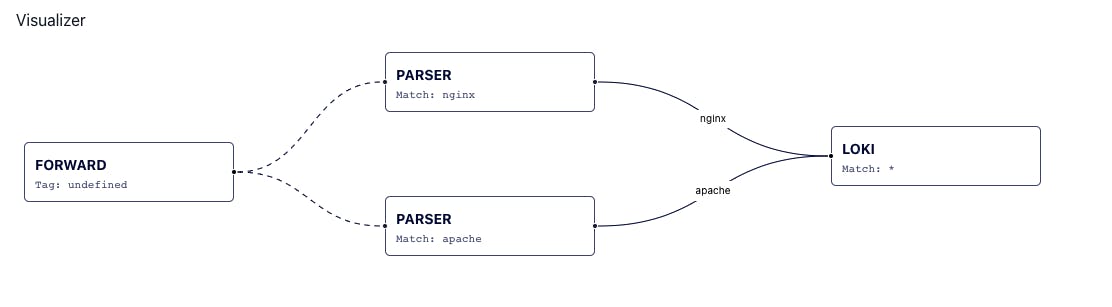

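- The data flow looks like this: flog and nginx containers → Docker `fluentd` logging driver → Fluent Bit (`forward` input → `parser` filters → `loki` output) → Grafana Cloud Loki.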
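To see why the parsed version is easier to query, here is a Python sketch. Its assumptions:

- The `loki` output ships each record as a JSON log line (its default `line_format`).
- The hypothetical `query_code` helper roughly mimics a LogQL filter such as `| json | code="500"`. This is not how Loki itself evaluates queries.
- The records are made up.

```python
import json


def query_code(lines, code):
    """Hypothetical helper: very rough stand-in for `| json | code="<code>"`."""
    hits = []
    for line in lines:
        fields = json.loads(line)  # top-level JSON keys become queryable fields
        if fields.get("code") == code:  # lines without a "code" key never match
            hits.append(fields)
    return hits


# Without the FILTER sections: the access log line is one opaque "log" string.
unparsed = [json.dumps({
    "container_name": "/flog-1",
    "source": "stdout",
    "log": '10.0.0.7 - - [10/Oct/2023:13:55:36 +0000] "POST /login HTTP/1.1" 500 512',
})]

# With the parser filters: each part of the line is its own key.
parsed = [json.dumps({
    "host": "10.0.0.7", "user": "-", "method": "POST",
    "path": "/login", "code": "500", "size": "512",
})]

print(len(query_code(unparsed, "500")))  # 0
print(len(query_code(parsed, "500")))    # 1
```

A plain text search such as `|= "500"` would still find the unparsed line. However, it can’t tell a 500 status code apart from, say, a 500-byte response size.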

Bonus:

- Fluent Bit can output (send) the collected logs to multiple destinations.

- You can read more about routing logs in the Fluent Bit documentation.

- For example, say that for debugging purposes you want the Nginx logs printed to stdout as well as sent to Grafana. Add a `stdout` output matching `nginx` to your `fluent-bit.conf`, so that its `[OUTPUT]` sections look like this:

```
[OUTPUT]
    Name  stdout
    Match nginx

[OUTPUT]
    Name        loki
    Match       *
    Host        <Loki host here>
    port        443
    tls         on
    tls.verify  on
    http_user   <username here>
    http_passwd <API-KEY here>
```
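Routing is driven by each `[OUTPUT]` section’s `Match` pattern: a record goes to every output whose pattern matches its tag. The Python sketch below approximates this for the two outputs above. It uses `fnmatch` wildcards as a stand-in for Fluent Bit’s own matcher, which is an assumption, not its implementation; `OUTPUTS` and `destinations` are hypothetical names.

```python
from fnmatch import fnmatchcase

# (Name, Match) pairs taken from the two [OUTPUT] sections above.
OUTPUTS = [("stdout", "nginx"), ("loki", "*")]


def destinations(tag):
    """Return the outputs whose Match pattern accepts this tag, in config order."""
    return [name for name, pattern in OUTPUTS if fnmatchcase(tag, pattern)]


print(destinations("nginx"))   # ['stdout', 'loki']
print(destinations("apache"))  # ['loki']
```

So with this config, Nginx records are printed to stdout and also sent to Loki, while Apache records only go to Loki.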